AI gone wild

AI is increasingly being perverted to create non-consensual adult material, and even CSAM (child sexual abuse material). Most model owners "try" to gate it, but one seems to flout all common-sense restrictions. Additionally, the predictions about AI made at the beginning of 2025 turned out to be dead wrong.

As a tech head, I find that I have to pay attention to some skeevy things. One that has arisen lately is the commodification of AI image manipulation, and that, coupled with the fucking complete lack of any guardrails at xAI (Twitter), is a giant shit-show.

Grok (the xAI agent on Twitter) is being used to create CSAM and other skeevy things. Users simply reply to a photo with a comment like "Grok, put her in a bikini" or "grok, spread her legs" or any other fucked up thing.

I read about this in a few places, including a piece by Matteo Wong at The Atlantic.

(Gifted link: "Elon Musk's Pornography Machine", totally worth the read.)

Earlier this week, some people on X began replying to photos with a very specific kind of request. “Put her in a bikini,” “take her dress off,” “spread her legs,” and so on, they commanded Grok, the platform’s built-in chatbot. Again and again, the bot complied, using photos of real people—celebrities and noncelebrities, including some who appear to be young children—and putting them in bikinis, revealing underwear, or sexual poses. By one estimate, Grok generated one nonconsensual sexual image every minute in a roughly 24-hour stretch.

Although the reach of these posts is hard to measure, some have been liked thousands of times. X appears to have removed a number of these images and suspended at least one user who asked for them, but many, many of them are still visible. xAI, the Elon Musk–owned company that develops Grok, prohibits the sexualization of children in its acceptable-use policy; neither the safety nor child-safety teams at the company responded to a detailed request for comment.

Gee, no surprises there. AI has been used to make nonconsensual porn since at least 2017, but now it is mainstream. And to be frank, while the main model makers have (mostly) been good about blocking the egregious requests, there have been models out there that skirt the "respectability" angle for a long time.

The problem here is that xAI makes one of the foundation models, and coupled with Twitter (I will deadname it until I die), the whole thing is built to be as permissive as possible, and that includes sex. I mean, for fuck's sake, Musk was touting the use of Grok in "waifu" or girlfriend mode (now I need to take a looooong hot shower).

I mean:

Grok and X appear purpose-built to be as sexually permissive as possible. In August, xAI launched an image-generating feature, called Grok Imagine, with a “spicy” mode that was reportedly used to generate topless videos of Taylor Swift. Around the same time, xAI launched “Companions” in Grok: animated personas that, in many instances, seem explicitly designed for romantic and erotic interactions. One of the first Grok Companions, “Ani,” wears a lacy black dress and blows kisses through the screen, sometimes asking, “You like what you see?” Musk promoted this feature by posting on X that “Ani will make ur buffer overflow @Grok 😘.”

Ick to the max right there.

As if you need another reason to strap Musk to one of his Falcon Heavies and fire his larded ass into the sun:

Grok seems to be unique among major chatbots in its permissive stance and apparent holes in safeguards. There aren’t widespread reports of ChatGPT or Gemini, for example, producing sexually suggestive images of young girls (or, for that matter, praising the Holocaust). But the AI industry does have broader problems with nonconsensual porn and CSAM. Over the past couple of years, a number of child-safety organizations and agencies have been tracking a skyrocketing amount of AI-generated, nonconsensual images and videos, many of which depict children. Plenty of erotic images are in major AI-training data sets, and in 2023 one of the largest public image data sets for AI training was found to contain hundreds of instances of suspected CSAM, which were eventually removed—meaning these models are technically capable of generating such imagery themselves.

Ugh. When you scrape the internet, you are gonna sweep up a lot of horrible shit, and regardless of how you tune your models, someone is gonna figure out how to coax the models to regurgitate some truly horrible garbage.

Bonus - AI is not really replacing people

At least, it isn't really able to replace people in the workforce, but that isn't stopping executives and senior leaders from using AI as an excuse to trim staff and to not hire.

Alas, this is coupled with the wiping out of the middle of the workforce, which I wrote about here:

Also, in a section of this omnibus post, I talked about how AI is eliminating the entry point into careers for a lot of people:

At my own company, our CTO stated in early 2025 that by the end of the year, 70% of our code would be written by AI. I doubt we got there (for the record, I am not in engineering, so I can't gauge it), but from talking to some peers, it is clear that AI isn't good enough yet to do that.

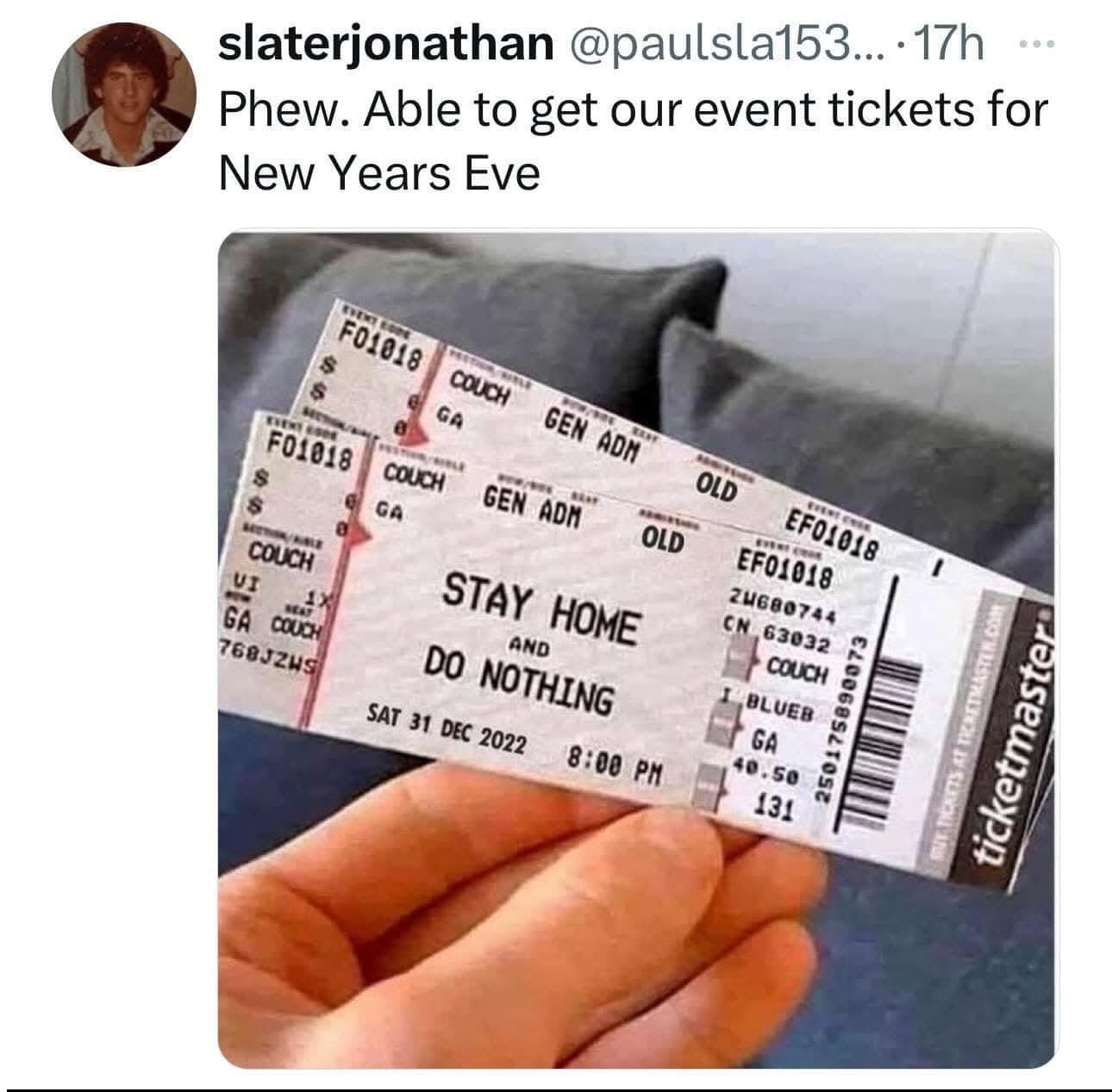

Turns out that while AI isn't directly replacing a lot of staff, it is clearly the excuse du jour for executive teams to trim staff. Yale surveyed the CEOs of the largest companies: not one was planning to increase staff, about a quarter plan to reduce headcount, and the rest plan to remain "flat" for 2026. So much for Trump's "Hottest economy evarrrrr!"

I recommend spending the time on this video; it's worth the watch: